The ‘Vision + Robotics’ program at Wageningen University & Research (WUR) focuses on computer vision, robotics, and artificial intelligence (AI). On September 17th, the program’s researchers guided over 120 participants through various projects in small groups. Vakblad Voedingsindustrie was in attendance.

Vision + Robotics brings together experts from multiple WUR fields, including marine, livestock, agriculture, horticulture, and food. “One of our goals is to promote collaboration and innovation between industrial partners, researchers, and the broader community,” said program manager Erik Pekkeriet during his opening remarks. He emphasized the many benefits of robotics in the agrifood sector and the food industry. “While the initial investment can be high, robots offer flexible scalability, higher utilization rates, fewer mistakes, and less downtime due to illness. This results in lower production costs and more consistent product quality. Robotics can also enhance sustainability, for example, through more precise dosing and reduced waste. The food industry constantly faces changing consumer demands and the diversification of sales channels. Robots can help switch between different production processes without compromising efficiency. This is especially relevant at the beginning and end of production lines, such as in dosing and packaging. Ideally, I envision eliminating production lines altogether and moving toward production cells, making our factories more scalable. Beyond production improvements, robots can also be used in areas such as selecting and optimizing high-value raw materials and products, as well as for cleaning and safety inspections.”

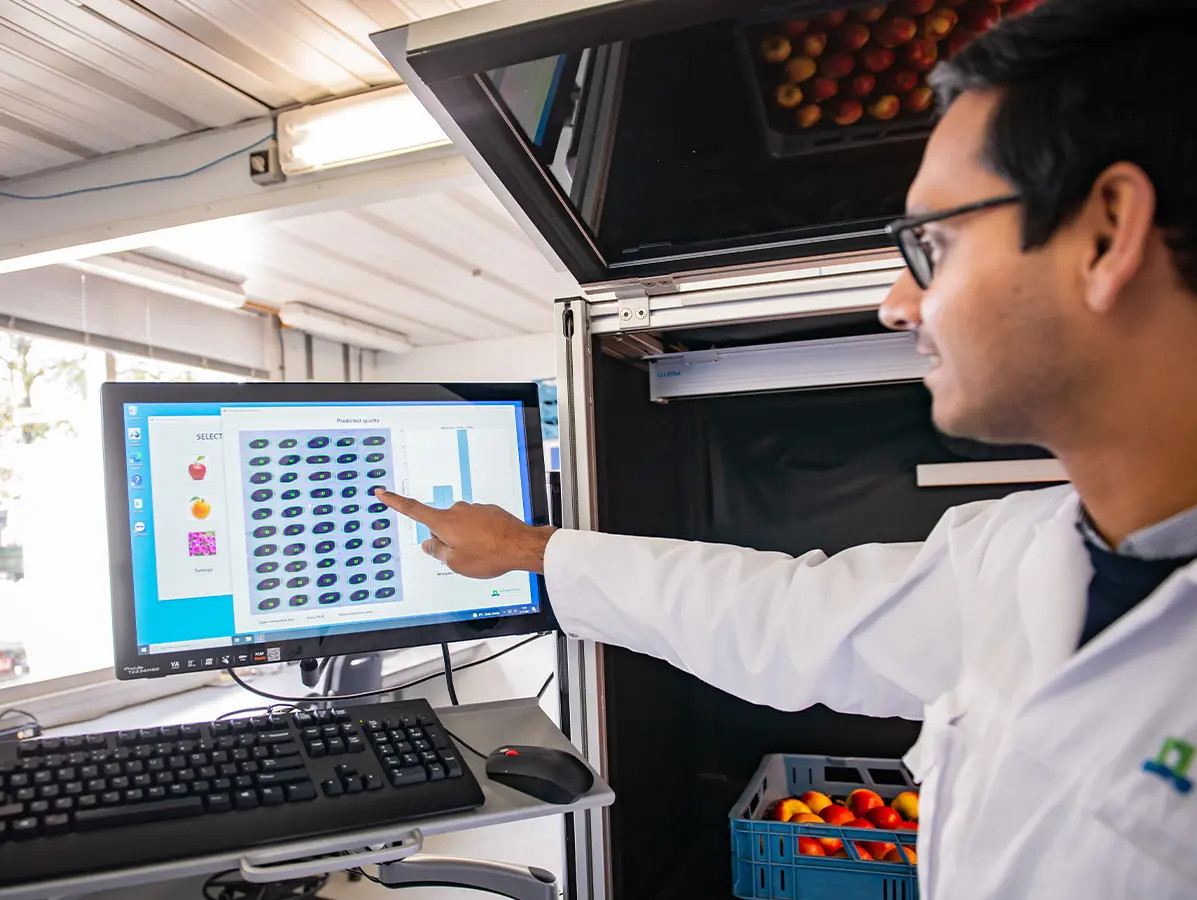

During the tour, we saw the latest developments in computer vision (2D, 3D, and motion tracking), spectral imaging systems (from X-ray to microwaves), artificial intelligence (including deep learning), gripper techniques, and machine integration.

Our group started with a visit to the greenhouse at the Netherlands Plant Eco-phenotyping Centre (NPEC). This facility, shared by Utrecht University and WUR, and thus also by Vision + Robotics, provides cutting-edge technology for monitoring, analyzing, and controlling plant growth in a fully controlled greenhouse environment. During experiments, often conducted for external clients, plants are transported via an automated system to an enclosed chamber, where each plant is photographed by 15 stationary RGB cameras. This chamber is part of what Vision + Robotics calls the ‘Maxi-MARVIN.’ Within seconds, each plant is 3D scanned.

The resulting images and data allow for the digital, automated dissection of each plant to determine its ‘plant architecture.’ For most plants, this includes the main stem, side branches, and the distance between them, as well as leaf surface area and any fruits. Researchers use this architecture to phenotype the plant: how do environmental factors, combined with genetics, impact the form, growth, and production of plants, like tomatoes? This information is valuable for plant breeders, who aim to reap the benefits of their work as quickly as possible.

The technology behind Maxi-MARVIN is based on a technique known as ‘voxel carving.’ Within seconds, this method generates a 3D point cloud of a plant. The point cloud is of high quality for open plant structures, such as tomato, cucumber, or bell pepper plants, but the method works less well for compact plants like lettuce. “Such plants basically turn into one big blob.” The next step, as the researchers explain, is to ‘flatten’ the 3D images into 2D images, a process known as reprojection. “One of the many advantages is that it allows you to evaluate the plant from multiple perspectives, resulting in greater accuracy. We also do this because 2D algorithms are currently more advanced than 3D ones.” The digital plants, or digital twins, are also used to run simulations.
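For readers who want a feel for the idea, here is a minimal sketch of voxel carving and reprojection. It is a toy illustration and not the Maxi-MARVIN implementation, which the article does not describe: it assumes three idealized orthographic views along the grid axes instead of 15 calibrated cameras, and the names `carve` and `make_sphere` are hypothetical.

```python
import numpy as np


def carve(silhouettes):
    """Carve a voxel grid from axis-aligned silhouettes.

    silhouettes maps an axis (0, 1 or 2) to a square boolean mask: the
    object's outline as seen when looking along that axis.
    A voxel survives only if every view sees it inside the outline.
    """
    n = next(iter(silhouettes.values())).shape[0]
    grid = np.ones((n, n, n), dtype=bool)
    for axis, mask in silhouettes.items():
        # Extrude the 2D mask along its viewing axis and intersect.
        grid &= np.expand_dims(mask, axis=axis)
    return grid


def make_sphere(n, radius):
    """Stand-in object (assumption): a solid sphere in an n^3 grid."""
    c = (n - 1) / 2
    x, y, z = np.indices((n, n, n))
    return (x - c) ** 2 + (y - c) ** 2 + (z - c) ** 2 <= radius ** 2


n = 32
obj = make_sphere(n, 10)

# "Photograph" the object: project it onto a plane along each axis.
silhouettes = {axis: obj.any(axis=axis) for axis in range(3)}

hull = carve(silhouettes)        # carved volume (the visual hull)
points = np.argwhere(hull)       # point cloud: one (x, y, z) row per voxel

# Reprojection: flatten the carved 3D volume back into a 2D view.
reprojected = hull.any(axis=0)
```

The carved volume always encloses the true object, and with only a few views it overestimates it. This is also one way to picture why a dense plant such as lettuce ends up as a blob: few rays pass through it, so little of the volume is carved away.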

To make the most of vision technology and further improve its reliability, sensor fusion, the merging of data from various vision equipment and other sensors, is gaining ground. We saw this in action during demonstrations of gripper techniques used for preparing meal salads and picking apples. Packaging seals are automatically inspected using near-infrared (NIR) spectroscopy, while bone fragments, glass, or metal particles can be detected effectively with X-ray equipment. When combined with a hyperspectral camera or, more recently, microwaves, the data can be further enriched, leading to even greater reliability.
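One simple way to see why combining sensors can raise reliability is to fuse their individual detection probabilities. The sketch below is an assumption for illustration only, not the method shown at the demonstrations: it treats each sensor as an independent source of evidence and adds their log-odds. The function names and the example probabilities are hypothetical.

```python
import math


def logit(p):
    """Convert a probability into log-odds."""
    if not 0.0 < p < 1.0:
        raise ValueError("probability must lie strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def fuse(probabilities, prior=0.5):
    """Fuse per-sensor probabilities that a product is contaminated.

    Assumes the sensors are conditionally independent (a strong
    simplification); each sensor's evidence relative to the prior
    is summed in log-odds space.
    """
    prior_logit = logit(prior)
    total = prior_logit + sum(logit(p) - prior_logit for p in probabilities)
    return 1.0 / (1.0 + math.exp(-total))


# Hypothetical readings: X-ray says 0.7, hyperspectral camera says 0.8.
combined = fuse([0.7, 0.8])
```

Two sensors that agree produce a combined estimate more confident than either one alone, while a sensor reporting 0.5 adds no evidence and leaves the result unchanged.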

Further along the tour, we were introduced to the art of precise weeding and the role of vision technology and deep learning in this process. Challenges were also discussed, as not everything goes smoothly. Particularly noteworthy was the simulation demonstrating how small flatfish are sorted from a conveyor belt amid a mix of other fish and shells, all tumbling over each other. The researchers are using synthetic data to teach a robot to identify the different species it sees, even when they are partially hidden. The goal: to separate flatfish from bycatch. My takeaway was that 3D computer vision can apparently determine the shape characteristics of fast-moving agrifood products and digitally reconstruct them. This technology is useful not just for fish, but for all kinds of sorting and packaging processes.

Source: Vakblad Voedingsindustrie 2024